Cache Mapping Function

First, let's understand what the Memory Management Unit is and how it works. The Memory Management Unit (MMU) is a hardware component, often integrated into the computer's central processing unit (CPU), that handles memory management for the CPU. Its major function is to translate virtual memory addresses into physical memory addresses. A physical address identifies an actual location in primary memory (RAM). Now that we have an idea of what the MMU does, let's look at how the levels of the memory hierarchy are named.
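The translation step can be made concrete with a small sketch. It assumes a single-level page table and 4 KiB pages. The page table contents, `PAGE_SIZE`, and `translate` are hypothetical, chosen only for illustration. Real MMUs typically use multi-level page tables and a translation lookaside buffer (TLB).

```python
PAGE_SIZE = 4096  # assumption: 4 KiB pages

# Hypothetical single-level page table: virtual page number -> physical frame number.
page_table = {0: 5, 1: 9, 2: 3}


def translate(virtual_address: int) -> int:
    """Translate a virtual address into a physical address (illustrative sketch)."""
    page_number, offset = divmod(virtual_address, PAGE_SIZE)
    if page_number not in page_table:
        # A real MMU would raise a page fault for the operating system to handle.
        raise LookupError(f"page fault: virtual page {page_number} is not mapped")
    frame_number = page_table[page_number]
    # The offset within the page is unchanged; only the page number is replaced.
    return frame_number * PAGE_SIZE + offset


print(hex(translate(0x1234)))  # virtual page 1, offset 0x234 -> frame 9 -> 0x9234
```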

We can label any two levels of the memory hierarchy as the "primary level" and the "secondary level". For example, between the primary memory (RAM) level and the secondary memory (HDD/SSD) level, RAM is the primary level and the HDD/SSD is the secondary level. Likewise, between the cache level and the primary memory level, the cache is the primary level and RAM is the secondary level. In every pair, the primary level is the one closer to the CPU: the faster level, with the higher priority of the two.

Cache Memory Organization

Here we will learn how the cache organizes and stores incoming data. The CPU first looks for requested data in its registers. If the data is not there, it checks the cache, then primary memory (RAM), then secondary memory (HDD/SSD), and so on down the hierarchy. When a block is brought in from primary memory (RAM), the cache either has room for it or is already full. For these two cases, cache memory uses different methods to store the data:

- Placement: the criteria used to place an incoming main memory block in the cache.

- Replacement: the criteria used to choose which cache block to replace with an incoming block when the cache is full.
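The lookup order described above (registers, then cache, then RAM, then secondary storage) can be sketched as a search through the levels from fastest to slowest. The level contents and the `lookup` function are hypothetical. Each level is modelled as a plain dictionary from address to data.

```python
# Hypothetical memory hierarchy, ordered from fastest (closest to the CPU) to slowest.
hierarchy = [
    ("registers", {}),
    ("cache", {}),
    ("RAM", {0x10: "a", 0x20: "b"}),
    ("disk", {0x10: "a", 0x20: "b", 0x30: "c"}),
]


def lookup(address: int):
    """Search each level in order and return (level name, data) for the first hit."""
    for name, contents in hierarchy:
        if address in contents:
            return name, contents[address]
    raise KeyError(f"address {address:#x} not found at any level")


print(lookup(0x20))  # ('RAM', 'b')
print(lookup(0x30))  # ('disk', 'c')
```

A real system would also copy the block into the faster levels after a miss. That step is where the placement and replacement techniques come in.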

Placement Techniques

There are three common techniques for placing data in cache memory:

- Direct Mapping

- Fully Associative Mapping

- Set Associative Mapping

Direct Mapping (Many-to-One)

It places an incoming main memory block into a specific fixed cache block location.

Example: main memory consists of 4K (4096) blocks, cache memory consists of 128 blocks, and each block holds 16 words.

This diagram shows how the blocks are allocated.

In this example, main memory has 4096 blocks, indexed from 0 to 4095 as you can see in the above table. There are also 128 cache blocks, so 4096 / 128 = 32 main memory blocks are mapped to each cache block.

For example, blocks 0, 128, 256, ..., 3968 are all mapped to cache block 0, so only one of those blocks can be stored in cache block 0 at a time.
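The pattern 0, 128, 256, ..., 3968 comes from mapping main memory block i to cache block i mod 128. The sketch below checks this with the example's numbers. The function name `cache_block_for` is hypothetical.

```python
MAIN_MEMORY_BLOCKS = 4096  # from the example: 4K main memory blocks
CACHE_BLOCKS = 128         # from the example: 128 cache blocks


def cache_block_for(memory_block: int) -> int:
    """Direct mapping: each main memory block goes to exactly one cache block."""
    return memory_block % CACHE_BLOCKS


# Every main memory block that competes for cache block 0.
competitors = [b for b in range(MAIN_MEMORY_BLOCKS) if cache_block_for(b) == 0]

print(len(competitors))                   # 32
print(competitors[:3], competitors[-1])   # [0, 128, 256] 3968
print(cache_block_for(130))               # 2
```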

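In the direct mapping technique, a tag table holds, for each cache block, an identifier (the tag) of the main memory block currently stored there. Only one of the memory blocks mapped to that cache block can be recorded in its tag entry at a time. The cache block itself holds the data of that memory block. Because 32 memory blocks share each cache block, the tag only needs to distinguish among those 32 blocks.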

Advantage:- Simple and easy to implement.

Disadvantages:- Each main memory block is mapped to exactly one cache block. An incoming block therefore cannot be stored in any other cache block, even if empty cache blocks exist. In other words, each cache block can hold only the data of the memory blocks mapped to it.

As a result, the cache hit ratio can be low.
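The low hit ratio can be reproduced with a tags-only model of a direct-mapped cache. In this model, the tag of memory block i is i // 128, which records which of the 32 competing blocks is present. The class name `DirectMappedCache` is hypothetical, and no data is stored, only tags. Alternating between blocks 0 and 128 misses every time, even though 127 cache blocks stay empty.

```python
CACHE_BLOCKS = 128  # cache size from the example


class DirectMappedCache:
    """Tags-only model: records which memory block each cache block currently holds."""

    def __init__(self, num_blocks: int = CACHE_BLOCKS):
        self.num_blocks = num_blocks
        self.tags = [None] * num_blocks  # None means the cache block is empty
        self.hits = 0
        self.misses = 0

    def access(self, memory_block: int) -> bool:
        index = memory_block % self.num_blocks  # the only cache block this block may use
        tag = memory_block // self.num_blocks   # which of the competing blocks it is
        if self.tags[index] == tag:
            self.hits += 1
            return True
        self.misses += 1
        self.tags[index] = tag  # evicts the previous occupant, even if other blocks are empty
        return False


cache = DirectMappedCache()
for block in [0, 128, 0, 128, 0, 128]:
    cache.access(block)

print(cache.hits, cache.misses)   # 0 6
print(cache.tags.count(None))     # 127
```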

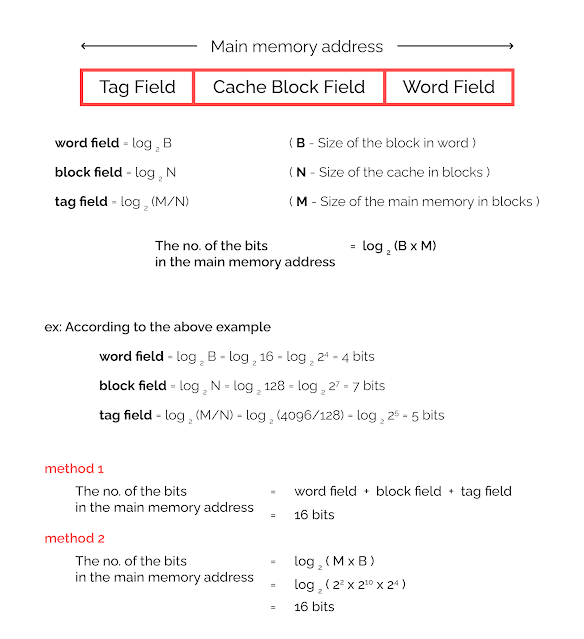

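In this technique, each main memory address is interpreted as three fields. For the example above, assuming word-addressable memory, the address is 16 bits wide (4096 blocks × 16 words = 65,536 words). It consists of a 5-bit tag (which of the 32 mapped memory blocks), a 7-bit block field (which of the 128 cache blocks), and a 4-bit word field (which of the 16 words in the block).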
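The sketch below shows how the field widths follow from the example's numbers and extracts the fields from an address. It assumes word-addressable memory. The function name `split_direct` is hypothetical.

```python
WORD_BITS = 4   # 16 words per block          -> log2(16)  = 4 bits
BLOCK_BITS = 7  # 128 cache blocks            -> log2(128) = 7 bits
TAG_BITS = 5    # 4096 / 128 = 32 per cache block -> log2(32) = 5 bits


def split_direct(address: int):
    """Split a 16-bit word address into (tag, block, word) fields for direct mapping."""
    word = address & ((1 << WORD_BITS) - 1)                    # lowest 4 bits
    block = (address >> WORD_BITS) & ((1 << BLOCK_BITS) - 1)   # next 7 bits
    tag = address >> (WORD_BITS + BLOCK_BITS)                  # remaining high 5 bits
    return tag, block, word


# Word 3 of main memory block 130: address = 130 * 16 + 3 = 2083
print(split_direct(130 * 16 + 3))  # (1, 2, 3)
```

Main memory block 130 lands in cache block 2 (130 mod 128) with tag 1 (130 // 128), which agrees with the mapping rule.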

Fully Associative Mapping

Unlike direct mapping, fully associative mapping allows an incoming main memory block to be placed in any available cache block. There are no fixed block mappings, so every cache block can hold the data of any main memory block. This flexibility is the main advantage of fully associative mapping over direct mapping.

Advantage:- An incoming main memory block can be placed in any available cache block. There are no fixed mappings as in the direct mapping technique.

Disadvantage:- Not cost-effective and difficult to implement. The tag of an incoming address must be compared with the tags of all cache blocks to find a match.
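A tags-only sketch of a fully associative cache shows the contrast with direct mapping. With 16 words per block, the whole 12-bit block number serves as the tag, and blocks 0 and 128 can now be cached together. The class name `FullyAssociativeCache` is hypothetical. The Python loop over stored tags stands in for the parallel comparison that hardware performs, and replacement on a full cache is not modelled here.

```python
WORD_BITS = 4  # 16 words per block, as in the earlier example


class FullyAssociativeCache:
    """Tags-only model: any memory block may occupy any free cache block."""

    def __init__(self, num_blocks: int = 128):
        self.num_blocks = num_blocks
        self.tags = []  # tags of the blocks currently cached

    def access(self, address: int) -> bool:
        tag = address >> WORD_BITS  # the whole block number is the tag
        if tag in self.tags:        # hardware compares against every tag in parallel
            return True
        if len(self.tags) < self.num_blocks:
            self.tags.append(tag)   # place the block in any free cache block
        # When the cache is full, a replacement technique must pick a victim (not modelled).
        return False


cache = FullyAssociativeCache()
results = [cache.access(block * 16) for block in [0, 128, 0, 128]]
print(results)  # [False, False, True, True]
```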

Set Associative Mapping

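Set associative mapping combines ideas from direct mapping and fully associative mapping. The cache is divided into a number of groups called sets, and each set consists of a number of blocks. A main memory block maps to exactly one set, but it can be placed in any available block within that set. To find the cache set to which main memory block i maps, use the formula below, where S is the number of sets in the cache:

set number = i mod S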
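The formula can be tried out with the earlier 128-block cache. The sketch assumes 2 blocks per set (2-way set associative), which gives 64 sets. The way count and the names `set_for` and `SetAssociativeCache` are hypothetical choices for illustration. Blocks 0 and 64 map to the same set but can still be cached together, because the set has two blocks.

```python
CACHE_BLOCKS = 128               # cache size from the earlier example
WAYS = 2                         # assumption: 2 blocks per set
NUM_SETS = CACHE_BLOCKS // WAYS  # 64 sets


def set_for(memory_block: int) -> int:
    """A main memory block maps to exactly one set: i mod (number of sets)."""
    return memory_block % NUM_SETS


class SetAssociativeCache:
    """Tags-only model: each set holds up to WAYS tags."""

    def __init__(self):
        self.sets = [[] for _ in range(NUM_SETS)]

    def access(self, memory_block: int) -> bool:
        s = self.sets[set_for(memory_block)]
        tag = memory_block // NUM_SETS
        if tag in s:
            return True
        if len(s) < WAYS:
            s.append(tag)  # any free block within the set can be used
        # Otherwise a replacement technique would choose a victim within this set.
        return False


cache = SetAssociativeCache()
print(set_for(0), set_for(64), set_for(128))       # 0 0 0
print([cache.access(b) for b in [0, 64, 0, 64]])   # [False, False, True, True]
```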

Now that we have discussed the placement techniques in cache memory organization, let's move on to the techniques used when the cache is full.

Replacement Techniques

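There are three common replacement techniques in cache memory organization. They are needed for fully associative and set associative mapping. In direct mapping, an incoming block always replaces whatever occupies its fixed cache block.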

- Random Selection

- First in First out (FIFO)

- Least Recently Used (LRU)

Random Selection Technique

A random number generator produces a random number between 0 and (number of cache blocks - 1). The cache block with that index is selected and replaced.
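The following is a minimal sketch of random replacement on a full cache, modelled as a list of cached block numbers. The function name `replace_random` is hypothetical. Python's `random.Random.randrange(n)` returns a value from 0 to n - 1, which matches the range described above. The generator is seeded only to make the run repeatable.

```python
import random
from typing import List, Optional

CACHE_BLOCKS = 128  # cache size from the earlier example


def replace_random(tags: List[int], new_tag: int, rng: Optional[random.Random] = None) -> int:
    """Overwrite a randomly chosen block of a full cache and return the victim's index."""
    if rng is None:
        rng = random.Random()
    victim = rng.randrange(len(tags))  # uniform over 0 .. len(tags) - 1
    tags[victim] = new_tag
    return victim


full_cache = list(range(CACHE_BLOCKS))  # pretend memory blocks 0..127 are cached
rng = random.Random(42)                 # seeded so the run is repeatable
victim = replace_random(full_cache, new_tag=500, rng=rng)
print(victim, full_cache[victim])       # some index in 0..127, now holding block 500
```

No usage history is consulted, which is why the technique is cheap but ignores locality.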

Advantage:- Simple and doesn't require much additional overhead.

Disadvantage:- Doesn't take locality into consideration.

NOTE: This technique was first used in the Intel iAPX microprocessor.

First in First Out (FIFO) technique

In the FIFO technique, the block to replace is chosen by how long each block has been in the cache. The block that has been in the cache the longest is selected for replacement, on the assumption that it is no longer needed. One complexity is that the cache must keep track of how long each block has been resident, that is, the order in which blocks were loaded.
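A minimal sketch of FIFO replacement for a small fully associative cache follows. It records load order with a queue instead of per-block timestamps, which gives the same eviction order. That design choice and the class name `FIFOCache` are assumptions for illustration.

```python
from collections import deque


class FIFOCache:
    """Tags-only fully associative cache with FIFO replacement."""

    def __init__(self, num_blocks: int):
        self.num_blocks = num_blocks
        self.queue = deque()  # oldest block on the left, newest on the right

    def access(self, block: int) -> bool:
        if block in self.queue:
            return True  # a hit does NOT change the block's position
        if len(self.queue) == self.num_blocks:
            self.queue.popleft()  # evict the block that has been cached the longest
        self.queue.append(block)
        return False


cache = FIFOCache(num_blocks=3)
for b in [1, 2, 3, 1, 4]:
    cache.access(b)
print(list(cache.queue))  # [2, 3, 4]
```

Block 1 is evicted even though it was just used, which illustrates that FIFO ignores locality.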

Disadvantage:- Doesn't take locality into consideration, and it is not as simple to implement as random selection.

Least Recently Used (LRU) Technique

In the LRU technique, the block to replace is chosen from the history of cache block usage: the block that has gone unused for the longest time is replaced. A cache controller circuit keeps track of references to all blocks while they reside in the cache.

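One way to track this history is to associate each block with a counter. Whenever a block is accessed, its counter is reset to 0 and the counters of the other blocks are incremented, so a block's counter grows the longer it goes unused. When a block needs to be replaced, the block with the highest counter value is replaced, because it is the least recently used block.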
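Here is a minimal sketch of counter-based LRU for a small fully associative cache. Each counter counts the accesses since that block was last used. The class name `CounterLRUCache` is hypothetical, and the sketch only models the bookkeeping that a cache controller would do in hardware. Compared with the FIFO example on the same access sequence, LRU keeps the recently used block 1 and evicts block 2 instead.

```python
class CounterLRUCache:
    """Tags-only fully associative cache using per-block counters for LRU."""

    def __init__(self, num_blocks: int):
        self.num_blocks = num_blocks
        self.counters = {}  # block -> accesses since this block was last used

    def _touch(self, block: int) -> None:
        for other in self.counters:
            self.counters[other] += 1  # every cached block gets one step "older"
        self.counters[block] = 0       # the referenced block is now the most recent

    def access(self, block: int) -> bool:
        hit = block in self.counters
        if not hit and len(self.counters) == self.num_blocks:
            # Highest counter = unused for the longest time = least recently used.
            victim = max(self.counters, key=self.counters.get)
            del self.counters[victim]
        self._touch(block)
        return hit


cache = CounterLRUCache(num_blocks=3)
for b in [1, 2, 3, 1, 4]:
    cache.access(b)
print(sorted(cache.counters))  # [1, 3, 4]
```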

In conclusion, this post covered the main cache mapping functions and replacement techniques. Let's explore more about computer organization in the next post. 🍏🐝